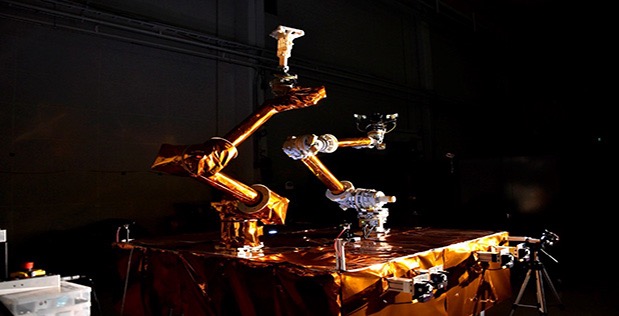

Whenever robotics is discussed, we see fascinating pictures of the hardware: hands, joints, humanoids, and so on. My recent article in Room, the European Space Journal, is no exception. But here’s an important thing to think about: how is that hardware CONTROLLED? That’s a lot harder to put into an image.

In space robotics, control is approached differently depending on the system. The Space Station Remote Manipulator System, or Canadarm2, is controlled by humans: either the astronauts on board the International Space Station or ground controllers. Other key space robotics experiments, however, have incorporated automation. Japan’s Engineering Test Satellite VII (ETS-VII) in 1997, DARPA’s Orbital Express in 2007, and others have used some degree of automated, software-guided control.

Why can’t we just control a space robot arm with a joystick operated on the ground? The primary reason is the time delay as radio signals travel to and from the satellite. Imagine that the robot is trying to dock two satellites together, or install a module on a client satellite. The two satellites, the one carrying the robotics and the client, are moving relative to one another. If too much time passes between sending a command and the arm’s response, the resulting position errors could cause an unwanted contact that sends the client satellite tumbling.

The time delay works the other way, too. Suppose the Earth-based operator is using TV images to decide where to guide the robotic arm. Those images are also delayed, so they show where the client was, not where it is now. The operator will actually be steering the arm toward the wrong place.

It takes a radio signal from Earth only about an eighth of a second to reach geosynchronous orbit. But the speed of light is not the only source of delay. Ground networks, compression, encryption, and other data handling add to it, possibly stretching the total to several seconds! Delays of that size would make controlling two objects moving with respect to one another very difficult.
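The arithmetic behind these delays is easy to sketch. The Python below is a minimal illustration, assuming a ground station directly beneath a geosynchronous satellite (an altitude of 35,786 km, the shortest possible path) and treating the extra ground-handling overhead as a hypothetical free parameter rather than a measured figure. It shows that the light time alone is roughly 0.12 s one way, and that an operator's correction rests on information at least two one-way delays old: the image coming down plus the command going up.

```python
# Speed of light in vacuum, km/s (exact by SI definition).
C_KM_PER_S = 299_792.458
# Altitude of geosynchronous orbit above the equator, km.
# Assumption: the ground station sits directly below the satellite.
GEO_ALTITUDE_KM = 35_786.0


def one_way_light_time_s(distance_km: float) -> float:
    """Propagation delay for a radio signal over distance_km."""
    return distance_km / C_KM_PER_S


def control_loop_delay_s(distance_km: float, overhead_s: float) -> float:
    """Image down plus command up, plus a hypothetical ground-handling overhead."""
    return 2 * one_way_light_time_s(distance_km) + overhead_s


def drift_m(relative_speed_m_per_s: float, delay_s: float) -> float:
    """How far the client moves relative to the arm while the loop is 'blind'."""
    return relative_speed_m_per_s * delay_s


if __name__ == "__main__":
    light = one_way_light_time_s(GEO_ALTITUDE_KM)
    print(f"one-way light time: {light:.3f} s")
    # Hypothetical overheads for networks, compression, encryption, etc.
    for overhead in (0.0, 2.0, 4.0):
        delay = control_loop_delay_s(GEO_ALTITUDE_KM, overhead)
        # Hypothetical relative speed of 5 cm/s between servicer and client.
        drift_cm = drift_m(0.05, delay) * 100
        print(f"overhead {overhead:.1f} s -> loop delay {delay:.2f} s, "
              f"drift {drift_cm:.1f} cm")
```

With the assumed relative speed of 5 cm/s, the client drifts only about a centimetre during the pure light-time loop, but more than ten centimetres once a couple of seconds of handling overhead are added.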

Instead, consider another approach: let the satellite with the robotic arm control the robot locally. Then there is no communication delay in the control loop. It also solves the problem of intermittent communications. Local control software can even react to unexpected events, such as the client satellite firing its thrusters, and withdraw far faster and more safely than if it had to wait for a ground-based operator to react.

Today’s satellite-servicing projects are all taking this approach. DARPA’s Robotic Servicing of Geosynchronous Satellites, Northrop Grumman’s Mission Extension Vehicle, and NASA’s Restore-L will all have local control software for safe, rapid operations and response to the unexpected.

This software has many tasks: cameras and LIDARs image the client satellite to determine its range and pose; the docking feature is tracked and monitored; the robotics and the satellite’s position are controlled in real time; and all the while, systems are checked for nominal operation, with automated responses to anomalies standing ready.

With such complexity, careful software testing becomes critical. A recent article in The Atlantic raises the spectre of software becoming so complex that verifying it is nearly impossible, and of well-meaning programmers adding features with unintended consequences.

There is a great historical example of this. In 2005, NASA flew a mission called Demonstration for Autonomous Rendezvous Technology (DART). The satellite operated entirely on its own. Part of its software called for the satellite to fly through an imaginary “window” in space, where it would slow down and change its operating mode. But no one asked the question: “What if the satellite misses the window?” As the DART accident report shows, the satellite did in fact miss the window, and it collided with its target.
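The question “what if the satellite misses the window?” can be made concrete with a small mode check. The Python below is a hypothetical sketch, not DART’s actual flight logic: the `Window` type, the `next_mode` function, the mode names, and the use of “range to the waypoint is growing” as the missed-window test are all illustrative assumptions. The point is that the check has an explicit branch for the case where the window is never entered, instead of silently pressing on with the approach.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    """Hypothetical waypoint 'window': a sphere the vehicle should pass through."""
    center: tuple[float, float, float]  # waypoint position, metres
    radius_m: float                     # capture tolerance, metres


def next_mode(window: Window,
              prev_pos: tuple[float, float, float],
              pos: tuple[float, float, float]) -> str:
    """Decide the next mode while cruising toward the window.

    Called once per navigation update with the previous and current position.
    """
    d_prev = math.dist(prev_pos, window.center)
    d_now = math.dist(pos, window.center)
    if d_now <= window.radius_m:
        # Inside the window: the nominal case the design planned for.
        return "SLOW_APPROACH"
    if d_now > d_prev:
        # Range to the waypoint is now increasing and we never entered it:
        # the window was missed, so abort instead of continuing the approach.
        return "ABORT"
    # Still closing on the window.
    return "CRUISE"


if __name__ == "__main__":
    window = Window(center=(0.0, 0.0, 0.0), radius_m=1.0)
    # A straight-line pass that misses the window by 5 m sideways.
    track = [(-20.0, 5.0, 0.0), (-10.0, 5.0, 0.0), (0.0, 5.0, 0.0), (10.0, 5.0, 0.0)]
    for prev, cur in zip(track, track[1:]):
        mode = next_mode(window, prev, cur)
        print(cur, mode)
        if mode != "CRUISE":
            break
```

On this track the check reports CRUISE twice and then ABORT once the vehicle has passed the window without entering it. A range-rate test is deliberately simplistic here; the design point is only that the “missed” outcome has its own branch.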

Space robotics developers must be aware of these possibilities. Software must be kept as simple as possible, limited to the key functions required for safe operations. Its logic must be verified multiple times, by independent reviewers, to catch logical errors. After all, computers only do what they are told.

Software increases the safety of space robotics, but that makes its development a key safety item in its own right. The “invisible hand” has to be well understood and thoroughly tested so that robots can achieve their potential for safe, reliable, efficient, and useful work in space.
